Your database knows what happened — why aren’t you listening?

pg_track_events emits analytics events as your data changes. This repo has the tools you need to map row changes into analytics events, and then stream them to tools like PostHog, Mixpanel, Segment, and BigQuery.

Features

Reliable, accurate, backend analytics without a bunch of .track() code.

🔄 Emit events as your data changes — no more duplicating logic across backend and tracking code.

🛡️ Secure and self-hosted — runs in your VPC, no SaaS relay.

🧠 Semantic events, not raw logs — transform DB changes into intelligible events with simple logic (Google CEL).

⚡️ Easy setup, then scalable: start with Postgres triggers (imperceptible +10-20µs write latency, see benchmarks), then scale to WAL and replicas if needed.

How it works

- Use our CLI to add change triggers to selected Postgres tables (either directly or by dumping a migration.sql file)
- Define how these changes are transformed into analytics events in pg_track_events.config.yaml
- Run a Docker container that reads changes from an outbox table schema_pg_track_events.event_log, processes them into analytics events, and forwards them to your destinations.
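The flow above follows the outbox pattern: triggers append change rows, and a worker drains them. The sketch below simulates that loop in memory with plain Python. The `ChangeRow` fields, the `drain_outbox` function, and the `table.op` event naming are hypothetical illustrations, not the actual layout of schema_pg_track_events.event_log or the worker's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ChangeRow:
    """Hypothetical shape of one outbox row written by a change trigger."""
    id: int
    table: str
    op: str                      # "insert", "update", or "delete"
    old: Optional[dict] = None   # row before the change (None on insert)
    new: Optional[dict] = None   # row after the change (None on delete)
    processed: bool = False


def to_event(row: ChangeRow) -> dict:
    """Turn a raw change into an analytics event (naming is illustrative)."""
    return {"name": f"{row.table}.{row.op}", "properties": row.new or row.old or {}}


def drain_outbox(outbox: list[ChangeRow], send: Callable[[dict], None]) -> int:
    """Forward every unprocessed row in id order; mark it only after send succeeds."""
    forwarded = 0
    for row in sorted(outbox, key=lambda r: r.id):
        if row.processed:
            continue
        send(to_event(row))   # if this raises, the row stays unprocessed for a retry
        row.processed = True
        forwarded += 1
    return forwarded


# Usage: one list stands in for the outbox table, another for the destination.
outbox = [
    ChangeRow(id=2, table="orders", op="insert", new={"id": 10, "total": 42}),
    ChangeRow(id=1, table="users", op="insert", new={"id": 7}),
]
delivered: list[dict] = []
count = drain_outbox(outbox, delivered.append)
```

Marking a row as processed only after the send succeeds means a failed delivery is retried on the next pass, at the cost of possible duplicates downstream.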

Quick Start

Add Triggers

Install the CLI

Initialize in your backend

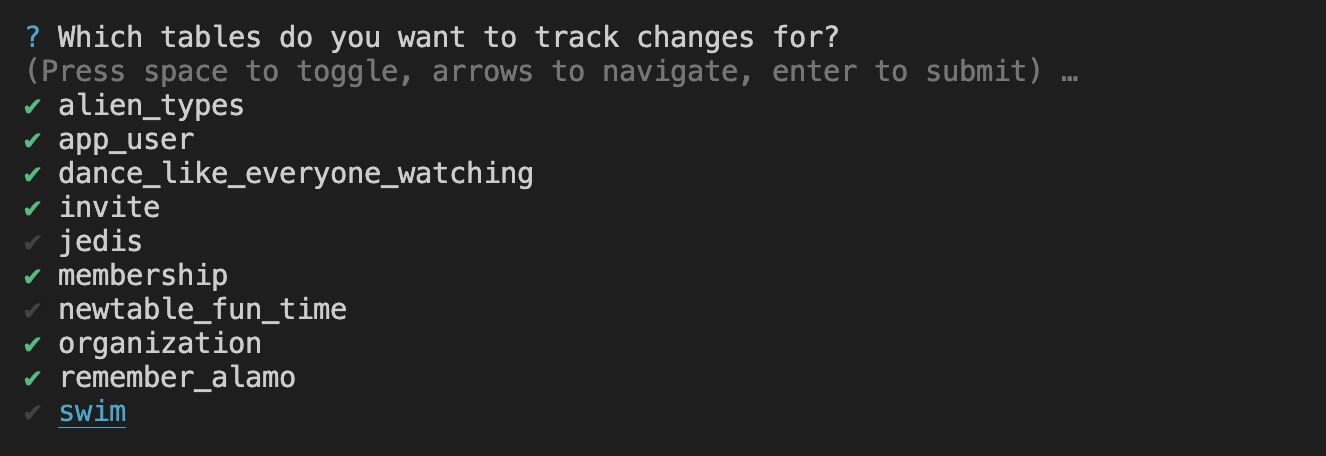

Select tables you wish to track

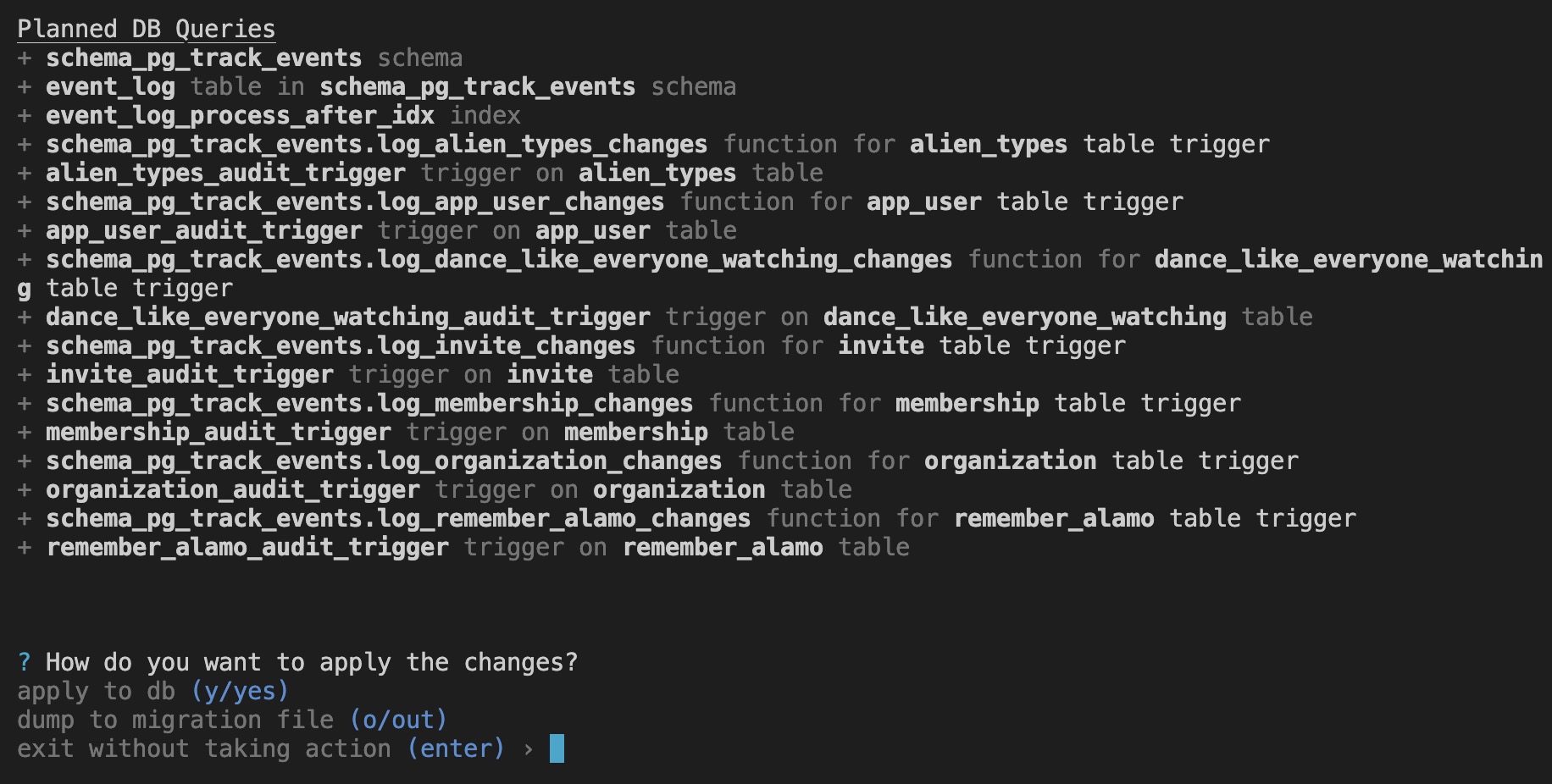

The CLI will output the triggers, functions and tables it plans to add.

Apply the changes

Apply the changes directly to the connected database by typing y (or yes), or write them to a migration file that you can review and apply yourself by typing o (or out).

Note: All tables, functions, and triggers are added to the schema_pg_track_events schema. To uninstall them, run pg_track_events drop, or run DROP SCHEMA schema_pg_track_events CASCADE yourself.

Configure Tracked Events

Define analytics events and configure destinations in the pg_track_events.config.yaml file (see the full specification).
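To make the idea of mapping row changes to semantic events concrete, here is a minimal Python sketch. In pg_track_events itself this logic is written as Google CEL expressions in the YAML config, so the Python below is only a stand-in. The `map_change` function, the `users` table, and the event names are all hypothetical.

```python
from typing import Optional


def map_change(table: str, op: str, old: Optional[dict], new: Optional[dict]) -> Optional[dict]:
    """Return an analytics event for a row change, or None to skip it."""
    if table == "users" and op == "insert":
        return {"event": "user_signup", "properties": {"user_id": new["id"]}}
    if table == "users" and op == "update" and old["email"] != new["email"]:
        return {
            "event": "user_changed_email",
            "properties": {"user_id": new["id"], "previous_email": old["email"]},
        }
    return None  # changes with no matching rule produce no event


signup = map_change("users", "insert", None, {"id": 1, "email": "a@example.com"})
email_change = map_change(
    "users", "update",
    {"id": 1, "email": "a@example.com"},
    {"id": 1, "email": "b@example.com"},
)
ignored = map_change(
    "users", "update",
    {"id": 1, "email": "b@example.com", "name": "A"},
    {"id": 1, "email": "b@example.com", "name": "B"},
)
```

The point of the mapping is that an update only becomes an event when it means something: the email change is reported, while the name-only update is dropped.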

Deploy a worker

Deploy a worker to your infrastructure. The Docker container created by the init command should be ready to build and deploy, but you can customize it if needed.

Watch the events flow!

License

MIT License

Created by @acunnife and @svarlamov to help them build better products.